Paphos • Dec 11

How to Train Your Robot

A Practical Guide to Physical AI

From Hard-Coded Logic to Learned Skills

Positronic Robotics

My Journey to Robotics

Following the "Data Complexity" Curve

Google (Search Ranking)

Organizing the world's text.

WANNA (Acq. by Farfetch)

Computer Vision & AR.

Farfetch

Fashion Tech Platform.

Positronic Robotics

Physical AI.

Sergey Arkhangelskiy

Founder & CEO @ Positronic

The Trap of Explicit Programming

The world is too messy for 'If/Else'

The "Long Tail" Problem

- ⚠️ Lighting changes by 10%

- ⚠️ Object moves by 5mm

- ⚠️ Cable stiffness varies
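A minimal sketch of why hand-written rules are brittle. The rule, its thresholds, and the function name `can_grasp` are all hypothetical, chosen only to mirror the lighting and position examples above.

```python
# Hypothetical hand-tuned rule: every constant below is an illustrative assumption.
GRASP_X_MM = 120.0            # where the programmer expects the object to be
POSITION_TOLERANCE_MM = 2.0   # how far off the object may sit before the grasp misses
MIN_BRIGHTNESS = 0.95         # detector threshold (1.0 = lighting at calibration time)


def can_grasp(object_x_mm: float, brightness: float) -> bool:
    """Return True only if the scene matches the conditions the rule was tuned for."""
    if brightness < MIN_BRIGHTNESS:
        return False  # the detector no longer finds the object under dimmer light
    if abs(object_x_mm - GRASP_X_MM) > POSITION_TOLERANCE_MM:
        return False  # the gripper would close on empty space
    return True


nominal = can_grasp(120.0, 1.0)   # the exact scene the rule was written for
dimmer = can_grasp(120.0, 0.9)    # lighting changes by 10%
shifted = can_grasp(125.0, 1.0)   # object moves by 5 mm
print(nominal, dimmer, shifted)
```

Each new failure case needs another branch, and the list of cases never ends.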

"High-level reasoning is easy. Low-level motor skills are hard."

End-to-End Learning

Pixels $\to$ Actions

Explicit Code

Manually programmed logic.

Learned Policy

Shown what to do.

What Does the Model See?

Multimodal Fusion

Inputs ($o_t$)

- Vision: RGB Images (Wrist + 3rd person)

- Proprioception: Joint angles, Gripper 3D position, Gripper width

- Language: "Put the red block on the plate"
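One way to picture the observation $o_t$ is as a single record holding all three modalities. This is a sketch only: the class name `Observation`, the field names, the 6-joint arm, and the units are assumptions, not the format of any particular library.

```python
from dataclasses import dataclass
from typing import List, Tuple

# An image as a plain H x W grid of (R, G, B) pixels, to keep the sketch dependency-free.
Image = List[List[Tuple[int, int, int]]]


@dataclass
class Observation:
    wrist_rgb: Image                               # vision: wrist camera
    third_person_rgb: Image                        # vision: external camera
    joint_angles: List[float]                      # proprioception (assumed radians)
    gripper_position: Tuple[float, float, float]   # proprioception (assumed meters)
    gripper_width: float                           # proprioception (assumed meters)
    instruction: str                               # language

    def proprioception(self) -> List[float]:
        """Flatten the robot-state fields into one vector."""
        return [*self.joint_angles, *self.gripper_position, self.gripper_width]


blank = [[(0, 0, 0)] * 4 for _ in range(3)]  # tiny 3 x 4 placeholder image
obs = Observation(
    wrist_rgb=blank,
    third_person_rgb=blank,
    joint_angles=[0.0] * 6,  # assumes a 6-joint arm
    gripper_position=(0.3, 0.0, 0.2),
    gripper_width=0.08,
    instruction="Put the red block on the plate",
)
state = obs.proprioception()
print(len(state))
```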

Outputs ($a_t$): Action Chunking

Predicting the Future.

$\pi(o_t) \to \{a_t, \dots, a_{t+k}\}$

Ensures smoothness & temporal consistency.
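A minimal sketch of how a chunked policy is rolled out: query once, execute the whole chunk open-loop, then query again. The 1-D world, `toy_policy`, and `TARGET` are hypothetical stand-ins for a real robot and a trained network.

```python
from typing import Callable, List, Tuple

TARGET = 1.0  # hypothetical goal position in a 1-D toy world


def toy_policy(observation: float, k: int) -> List[float]:
    """Stand-in for pi(o_t): return k actions that together cover half the remaining distance."""
    step = (TARGET - observation) / (2 * k)
    return [step] * k


def rollout(
    policy: Callable[[float, int], List[float]],
    start: float,
    horizon: int,
    k: int,
) -> Tuple[List[float], int]:
    """Execute each predicted chunk open-loop and count how often the policy is queried."""
    x = start
    trajectory = [x]
    queries = 0
    t = 0
    while t < horizon:
        chunk = policy(x, k)              # one inference call yields k future actions
        queries += 1
        for action in chunk[: horizon - t]:  # do not run past the end of the episode
            x += action                   # toy dynamics: the action is a position delta
            trajectory.append(x)
            t += 1
    return trajectory, queries


trajectory, queries = rollout(toy_policy, start=0.0, horizon=8, k=4)
print(queries, trajectory[-1])
```

Here 8 control steps need only 2 policy queries, and the actions inside each chunk are consistent with each other by construction.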

The "Robot Internet" Doesn't Exist (Yet)

We have to build the dataset manually

Teleoperation (The Gold Standard)

Humans "puppet" the robot to collect ground-truth data.

Quality is king: "Garbage In, Garbage Out."

- Device: Leader Arms (ALOHA) or VR (Meta Quest 3).

- Costly but necessary for manipulation.
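A teleoperation session boils down to timestamped (observation, action) pairs grouped into episodes. The sketch below is illustrative: `Step`, `Episode`, and `keep_for_training` are hypothetical names, and the filter criteria (operator-marked success, minimum length) are one assumed way to act on "Garbage In, Garbage Out".

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    timestamp_s: float
    observation: List[float]  # what the robot sensed
    action: List[float]       # what the human operator commanded


@dataclass
class Episode:
    task: str
    steps: List[Step] = field(default_factory=list)
    success: bool = False     # set by the operator after the demonstration

    def record(self, timestamp_s: float, observation: List[float], action: List[float]) -> None:
        self.steps.append(Step(timestamp_s, observation, action))


def keep_for_training(episodes: List[Episode], min_steps: int = 2) -> List[Episode]:
    """Keep only demonstrations that succeeded and are long enough to be useful."""
    return [e for e in episodes if e.success and len(e.steps) >= min_steps]


good = Episode(task="Put the red block on the plate")
good.record(0.00, [0.0, 0.0], [0.1, 0.0])
good.record(0.05, [0.1, 0.0], [0.1, 0.1])
good.success = True

bad = Episode(task="Put the red block on the plate")  # operator dropped the block
bad.record(0.00, [0.0, 0.0], [0.3, 0.0])
bad.record(0.05, [0.3, 0.0], [0.0, 0.0])

dataset = keep_for_training([good, bad])
print(len(dataset))
```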

Sim-to-Real (RL)

Training in physics engines (Isaac Lab, MuJoCo).

Great for locomotion, hard for contact-rich manipulation.

Quality > Quantity

Insights from Generalist Models

Active Data

Volume: Tiny

"The Body"

Passive Video

Volume: $\infty$

"The Brain"

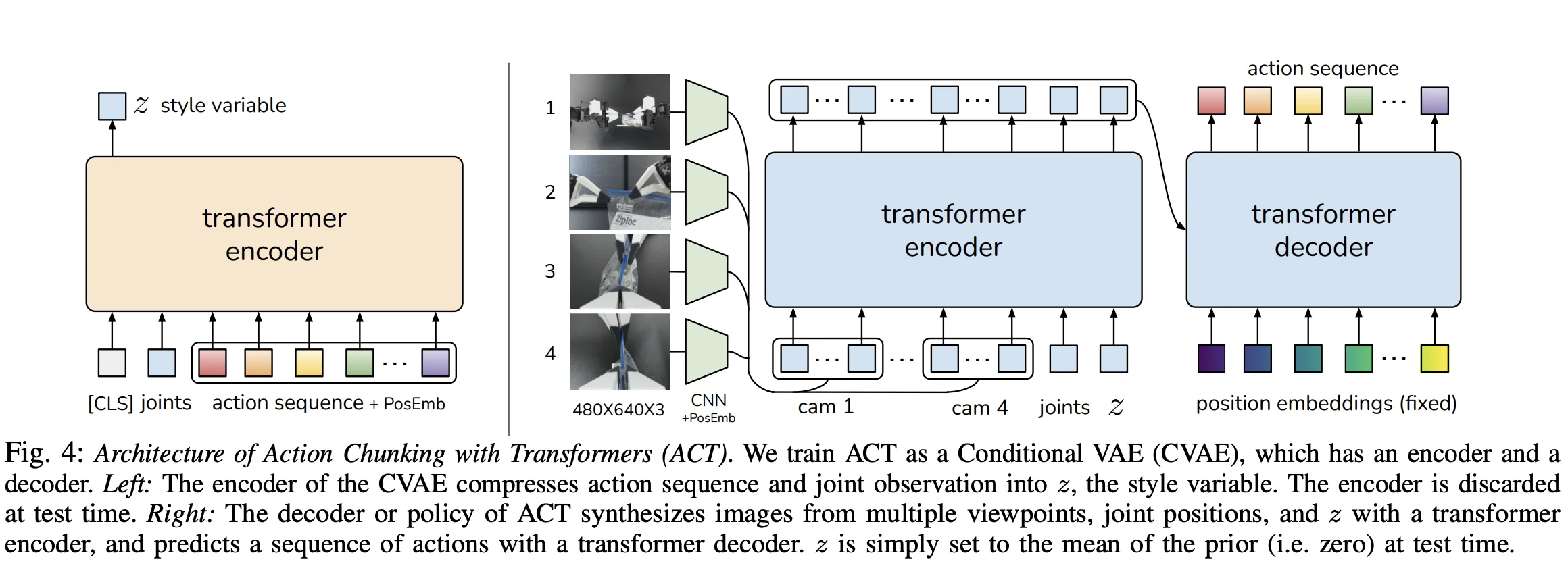

ACT: Action Chunking with Transformers

Tony Z. Zhao et al. (2023)

Uses a CVAE (Conditional Variational Autoencoder) to model multimodal action distributions, handling the inherent uncertainty in human demonstrations.

Key Idea: Action Chunking

Instead of predicting one step at a time, predict a fixed sequence (chunk) of actions ($k \approx 100$). This drastically reduces "compounding errors" (drifting off course) and produces smooth, coherent motions.
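A back-of-the-envelope model of the compounding-error argument. This is a toy, not an analysis from the ACT paper: it assumes every policy query has the same independent chance `error_per_query` of sending the robot off course, whatever the chunk size.

```python
import math


def policy_queries(episode_steps: int, chunk_size: int) -> int:
    """Number of decisions the policy makes when each query yields `chunk_size` actions."""
    return math.ceil(episode_steps / chunk_size)


def survival_probability(episode_steps: int, chunk_size: int, error_per_query: float) -> float:
    """Chance that no query derails the episode, under the independence assumption above."""
    return (1.0 - error_per_query) ** policy_queries(episode_steps, chunk_size)


EPISODE_STEPS = 400      # assumed episode length
ERROR_PER_QUERY = 0.01   # assumed 1% chance of a bad decision per query

single_step = survival_probability(EPISODE_STEPS, 1, ERROR_PER_QUERY)
chunked = survival_probability(EPISODE_STEPS, 100, ERROR_PER_QUERY)
print(policy_queries(EPISODE_STEPS, 1), policy_queries(EPISODE_STEPS, 100))
print(single_step, chunked)
```

With $k = 100$, the 400-step episode contains 4 decision points instead of 400, so there are far fewer opportunities for an error to feed into the next prediction.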

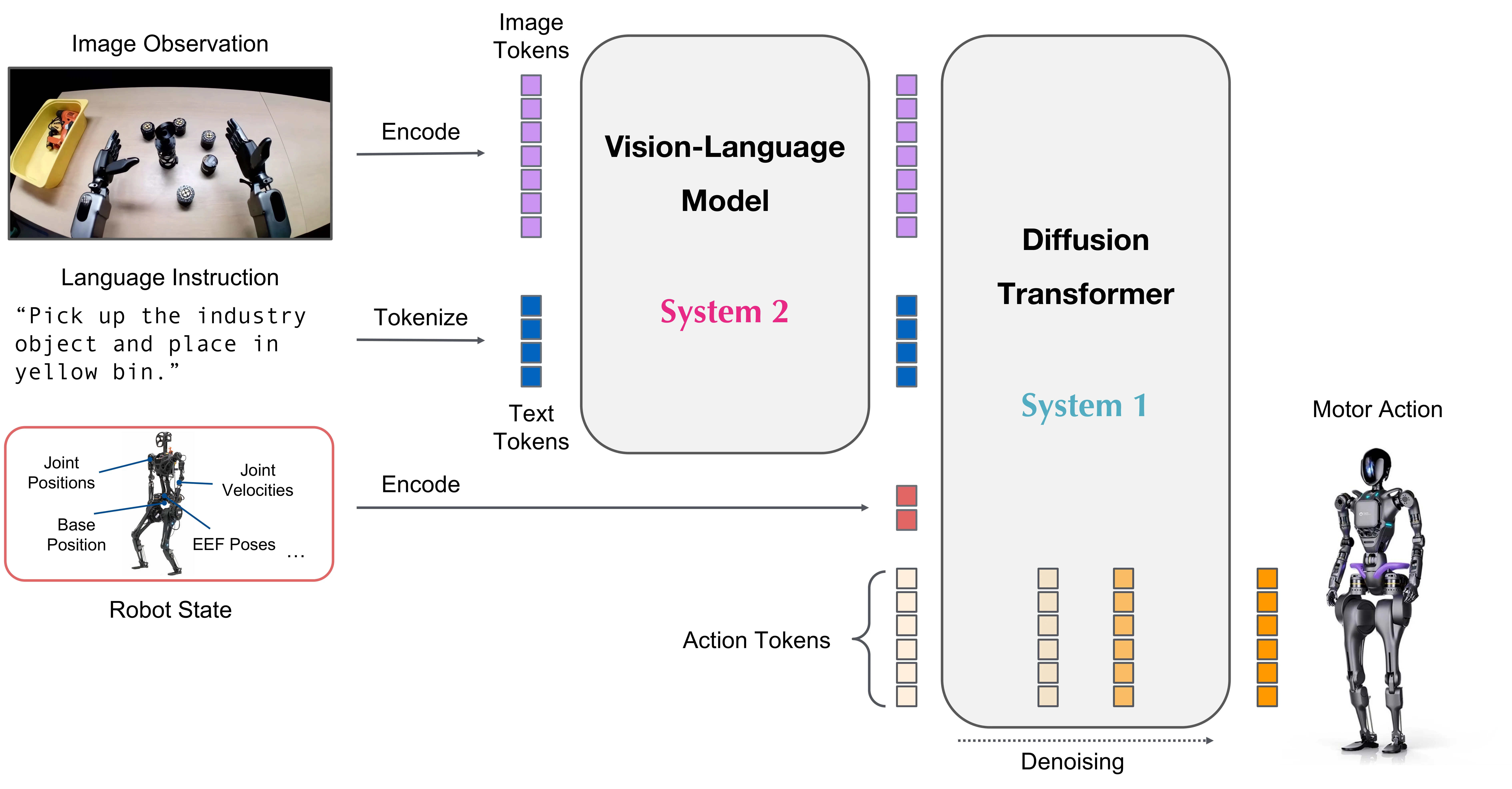

Project GR00T

NVIDIA (2024/2025)

Designed specifically for humanoid robots to be general-purpose assistants.

Key Idea: Dual-System Architecture

Inspired by "System 1 vs System 2" thinking.

System 2 (Slow): A VLM planner reasons about the task and goals.

System 1 (Fast): A high-frequency policy executes the motor skills.
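The dual-system idea can be sketched as one loop running at two rates: the planner updates the subgoal occasionally, and the policy emits a command on every tick. This is a generic pattern under stated assumptions, not NVIDIA's implementation; `slow_planner`, `fast_policy`, and the 10:1 rate ratio are hypothetical.

```python
from typing import List, Tuple


def slow_planner(instruction: str, tick: int) -> str:
    """Hypothetical System 2: stands in for a VLM that picks the current subgoal."""
    return f"{instruction} (planned at tick {tick})"


def fast_policy(subgoal: str, tick: int) -> str:
    """Hypothetical System 1: stands in for a high-frequency motor policy."""
    return f"command {tick} toward: {subgoal}"


def run(instruction: str, total_ticks: int, replan_every: int) -> Tuple[List[str], int]:
    """Issue a motor command every tick, refreshing the subgoal every `replan_every` ticks."""
    subgoal = ""
    plans = 0
    commands = []
    for tick in range(total_ticks):
        if tick % replan_every == 0:  # slow loop: runs only occasionally
            subgoal = slow_planner(instruction, tick)
            plans += 1
        commands.append(fast_policy(subgoal, tick))  # fast loop: runs every tick
    return commands, plans


commands, plans = run("pick up the cup", total_ticks=30, replan_every=10)
print(len(commands), plans)
```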

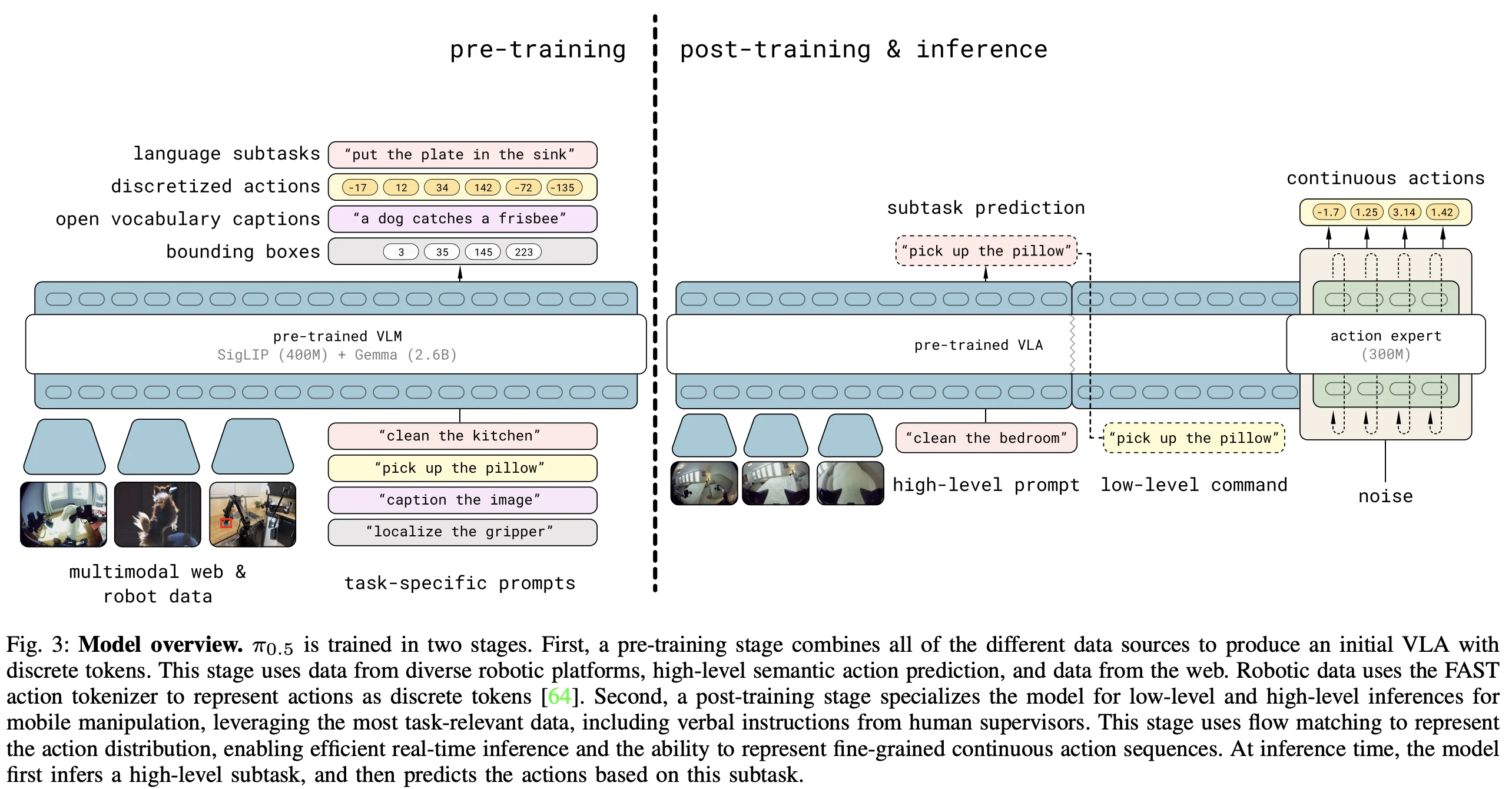

$\pi_{0.5}$

Physical Intelligence (2024/2025)

A true VLA (Vision-Language-Action) foundation model. Pre-trained on 10,000+ hours of diverse robot data (OXE + Proprietary).

Key Idea: Flow Matching

Uses Flow Matching (a simpler, faster alternative to Diffusion) to generate continuous action trajectories. This allows a single "brain" to control many different robot bodies by learning a shared physical understanding.
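At inference time, flow matching generates an action by starting from noise and integrating a learned velocity field from $t = 0$ to $t = 1$. The sketch below assumes the common straight-line path $x_t = (1 - t)\,x_0 + t\,x_1$ and replaces the trained network with a closed-form field for a dataset containing one action, so `make_toy_velocity`, `sample_action`, and the step count are illustrative rather than the $\pi_{0.5}$ implementation.

```python
from typing import Callable, List

Vector = List[float]
VelocityField = Callable[[Vector, float], Vector]


def make_toy_velocity(target: Vector) -> VelocityField:
    """Exact velocity field when the 'dataset' is the single action `target`.

    A real model would be a neural network conditioned on images, state, and language.
    """
    def velocity(x: Vector, t: float) -> Vector:
        # For the straight-line path, the flow at (x, t) points at the target
        # and is scaled by the time remaining.
        return [(goal - xi) / (1.0 - t) for goal, xi in zip(target, x)]

    return velocity


def sample_action(velocity: VelocityField, noise: Vector, num_steps: int = 10) -> Vector:
    """Euler-integrate dx/dt = velocity(x, t) from t = 0 (noise) to t = 1 (action)."""
    x = list(noise)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt  # t stays below 1, so the toy field never divides by zero
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


target_action = [0.25, -0.5]   # hypothetical 2-D action
noise = [0.7, -1.2]            # fixed "noise" sample, to keep the example deterministic
action = sample_action(make_toy_velocity(target_action), noise)
print(action)
```

The sampler is a short, fixed loop over a deterministic ODE, which is where the "simpler, faster" comparison with diffusion comes from.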

The "Zero-Shot" Myth: The Generalization Gap

Why "Generalist" models still struggle in the real world

1. The Reality Check

The Test: We ran a DROID-tuned model on a DROID robot.

The Result: Failure. Even with identical hardware, the policy broke.

2. The Root Causes

Data Starvation: We lack the data scale to learn generalized physics (friction, mass).

The RL Gap: Robots cannot safely "practice and fail" in the wild to self-improve.

3. The Verdict

Fine-Tuning is Mandatory.

Foundation models give you the "syntax" of movement; local data gives you the "semantics" of the task.

Positronic: The Toolset to "Train Your Robot"

A Python-Native Stack for the Full Lifecycle

1. The "Glue"

We bridge the gap between raw hardware drivers and high-level training libraries.

A unified OS: Collect $\to$ Manage $\to$ Train

2. The Workflow

- Collection: Accessible teleop via mobile / VR.

- Orchestration: pimm & dataset.

- Training: Native OpenPI, LeRobot integration.

3. The Vision

We handle the infrastructure plumbing so you don't have to.

You focus on the data and the policy.

Collection

iPhone / WebXR

Training

LeRobot / OpenPI

Real World

Robot Execution

The Future is Physical. Build It With Us.

The "Web 1995" Moment for Robotics

1. The Barrier is Gone

- Hardware: Build an arm for <$200, or dual-arm for <$5,000.

- Software: Positronic is Open Source & Python-native.

- Data: Collect it with the phone in your pocket.

2. Your Next Steps

- Star the Repo: Explore code, try WebXR teleop.

- Join the Hub: Building the community here in Paphos.

- Start Collecting: We need your data & experiments.

Scan to Join Discord & GitHub

Sergey Arkhangelskiy

Positronic Robotics